One more thing. I ended a bit abruptly a month ago, yet I recently came across two links that encapsulate the blog's preoccupations so fittingly that I cannot resist tying this last speculative bow.

The Wittgenstein scholar Peter Hacker explains that philosophy, unlike science, does not add to our knowledge of reality; rather, it examines the conceptual schemas through which we consider reality. Formal science is extremely successful in the relatively narrow task of documenting external reality, and it brooks no competitors; but arguably everything we most care about exists outside of science's purview. I particularly liked his comment that science yields an aggregate of facts that can be transmitted from generation to generation as a kind of epistemological bolus, whereas philosophy--like the arts--must be perpetually recreated.

Hacker also assails the prevailing scientistic fetish for neuroscience, arguing that from the point of view of real human priorities, it is the unified human agent that counts, not his or her brain and its myriad parts. "My amygdala made me do it" is not so different from "My soul made me do it." The moral self must take ownership of its concepts and its actions, not hide from them by ascribing them to the brain. Neuroscience may increasingly give us the capability to tinker more viscerally with our own experience, but this is nothing but the means to an ever debatable end. Science is nothing but a method, and one which can never identify the life most worth living. The latter can only be arrived at biographically and culturally, through lived experience, dialogue, and contingency. Everything that is not a fact exists in the vast penumbra of narrative.

Andy Martin looks at the overlap of autism and philosophy, arguing that both phenomena (endeavors? conditions?) involve a basic inability, or perhaps unwillingness, to fathom seemingly transparent communications. He suggests a tension between a philosophy that seeks to eradicate or solve conceptual confusions and one that accepts their inevitability. The latter is what always drew me to philosophy and to literature, which to me constitute the infinite project of outlining and marveling at the fundamental riddles of (inter)subjective experience. Consciousness is interesting not despite, but precisely because, imperfect understanding cannot be avoided. A philosophy or a science that proposes to eliminate conundrums is oppressive and must be resisted; a refusal to fully understand or be understood is a kind of assertion of freedom.

However, philosophy should not be sheer mystification. Language is the most powerful tool ever devised, and as such it can never be totally under our control; to some degree it always has a life of its own. Its spontaneous complexity is luxuriant and life-giving, as I have said, but it is well known that metaphors can become stifling vines threatening to choke off light and space. Philosophy is fundamentally a linguistic pruning operation, lopping off conceptual excrescences that threaten our narrative well-being.

Philosophical (that is, moral and aesthetic) truths can never be as unambiguous as scientific ones, but they achieve a certain pragmatic objectivity because, well, human beings are so constituted that we need certain standards that are not lightly or trivially modifiable. Where does psychology fit? Like medicine, psychology derives from science a sense of realistic empirical boundaries of what may be technically achieved, but its aims must arise through personal and cultural narrative philosophy.

And that really is all I have to say for now.

Monday, November 22, 2010

Tuesday, October 19, 2010

Finis

"Now my charms are all o'erthrown,

And what strength I have is mine own,

Which is most faint."

Prospero

In the Times Judith Lichtenberg examines altruism, in particular the fact that it seems impossible to isolate pure unselfishness, uncontaminated by all self-interested motives (even if only the often unconscious satisfaction of having done good). But she argues that altruism is no less desirable, individually and socially, for all its imperfections; indeed, a flawed, all-too-human altruism is the best we can hope for in this world, that is, at all.

It seems to me that the wish for unsullied altruism is parallel to the fantasy of an absolute free will, untrammeled by ambivalence, weakness, or material considerations. The totally free and altruistic act would, of course, be the act of God, not of human beings.

That seems like a fine note upon which to end this blog, which has now run for more than two years and 400 posts. A blog has no natural ending apart from the demise or sheer exhaustion of its author. I find that I have said all that I have to say in this format, and nothing would remain here but the recycling of old themes and, of course, gawking at the baubles of the Web as they flash by. I have arrived at that definite point marked not by ambivalence or by frustrated block, but by dispassion--it is time to move on.

If Emerson was right that life consists of what a man (sic) thinks about all day, then this blog has been a reasonable record of the past two years of my life. Many posts have been tossed off, but many have been thoughtful, carefully wrought and even alarmingly personal, especially to any perceptive readers out there. It has been a transitional time, befitting a blog I suppose.

Other projects await. I will need to prepare for a Grand Rounds presentation a few months hence (a late echo of the academic life), and I am getting closer, finally, to starting a private practice, which will take considerable doing. Any additional post here in the future would be a link to a possible different kind of blog, a more professionally discreet and decorous one that might support a practice.

Thanks to readers--be well.

Sunday, October 17, 2010

A Score of Scores

"Thus the whirligig of time brings in his revenges."

Twelfth Night

As mundane commemoration of this blog's 400th post, a few points on the infinite Web:

1. After I read this profile of Arvo Pärt, I went back and listened again to the wonderfully haunting "Tabula Rasa." It's spookily spiritual, scarily good, and perfect for Sunday Halloween this year. Music comes in two basic varieties: that which sets you in motion, and that which makes you more still.

2. The Atlantic on the unnerving unreliability of medical research. I have not been to a primary care physician in a dozen years, and barring any new or unusual symptoms, I hope to extend that streak far into the future (do not try this at home).

3. Melvin Konner on the likely primeval advantages of currently unfashionable distractibility and hyperactivity.

4. I happened to see three great local productions of Shakespeare comedies (Twelfth Night, As You Like It, A Midsummer Night's Dream) over the past two weeks. His comedies, entertaining though they are, are ultimately more disturbing than even the depths of Lear or the black hole of Iago because they show us the arbitrariness of erotic attachment. I was wondering why Macbeth wasn't making an appearance in the season of ghouls and goblins, but Viola, Olivia, Orlando, Rosalind, and Demetrius & Co. are finally more frightening than Lady Macbeth. Never look to Shakespeare for consolation--even the funhouse mirror does not flatter in the end.

5. The comedy club last night was uneven. Beyond a certain point, raunchiness is to true humor as bathos is to pathos; both are varieties of sentimentality, and failures of feeling. But okay for Saturday night.

Saturday, October 16, 2010

The Haunted Future

"An apple serves as well as any skull

To be the book in which to read a round,

And is as excellent, in that it is composed

Of what, like skulls, comes rotting back to ground."

Wallace Stevens, from "Le Monocle de Mon Oncle"

I've never gone in much for ghosts, but I'm reconsidering this after reading Leon Wieseltier's meditation on the presence of the unseen. He is writing about historical and cultural memory, but to be sure, there are myriad ghosts of the non-supernatural variety if we would just open our eyes and see them. Wieseltier writes, "Ghosts are the natural companions of estrangement; the invisible officers of tradition, of all the valuable things that have been declared obsolete but, in some stubborn hearts, are not obsolete. It is one of the fundamental properties of the human that the absent may be more significant than the present."

Humanity has always been locked in life-and-death struggle with its various ghosts. Monotheism sought to displace the ghosts of sky, sea, and mountain in favor of one great ghost-in-chief (of all absences, perhaps the one most present). The Enlightenment and modernity routed the fairies and ghouls of cave, dell, and stream. Perhaps the third great usurpation has been the perennial presentism of ubiquitous 24/7 Internet media, whose blinding glare renders the pre-millennial past ever more faint.

Memory, both personal and global, comprises legions of ghosts, as does the written word. Perhaps even the spoken word commemorates that which has passed--as Nietzsche wrote (and as the ever elegiac Harold Bloom was fond of quoting), "That for which we find words is something already dead in our hearts. There is always a kind of contempt in the act of speaking." In other words, we never quite catch up even to the present moment, much less the future.

I think that I have always had to work hard to free myself of ghosts. I have often felt like Frodo when the Ring was on his finger: reality dimmed and retreated and he found himself in a parallel or superimposed shadow world. At any given moment or situation, it is difficult to remind myself that "this, here is reality," for I know that "this, here" can only be the most minuscule excerpt of Reality, an atom in the universe. The ghosts vastly outnumber the living, beyond measure.

Internet media is interesting inasmuch as it locks us into a perpetual present, yet also displaces us from an actual present. The hordes of Blackberry and Facebook-checkers are not entirely "there," but they also are not haunted in any meaningful way; they are not afflicted by ghosts, rather, they and their living interlocutors are in a kind of Limbo. All virtualities are not created equal, and I prefer mine to have a history.

And then there are other specters, of alternative selves (and the persons-to-ghosts those selves would have encountered or even conceived) that occupy the paths not taken. There are ghosts of the future, those beings we think we or those we love might become. As James Surowiecki writes, procrastination can be a way of fending off or at least questioning such spirits.

Procrastination can be a manifestation of mere bewilderment or self-doubt, in which case it may help to break down a daunting or nebulous project into smaller, more concrete, and more practical stages. But as Surowiecki notes, procrastination may reflect a more basic instability in motivation, as identity is somewhat fluid and we can never be entirely sure that we will want tomorrow what we want today. He also reports--news flash!--that, believe it or not, human beings are ambivalent creatures beset with inner conflict (apparently economists and behavioral psychologists are just finding this out).

Inasmuch as it represents skepticism about distant, abstract goals in favor of more short-term rewards, procrastination may be a malady peculiar to modernity. Indeed there is a double-whammy here since complex societies demand deferred gratification at the same time that pleasurable and instant distractions grow more abundant. But there is a more fundamental existential issue. We often put things off because we are not yet sure of their value, and hope that the passage of time will clarify it, so that we can decide which among the plethora of "ghosts of the future" may become real.

Wednesday, October 13, 2010

Slacker Humanity

"I have a kind of alacrity in sinking."

Falstaff

The generally wise Theodore Dalrymple ponders the not-so-heroic motivations of Homo sapiens. His musings imply, to me, a few possibilities:

1. People often make the cardinal mistake of assuming that other people--whether of a different nationality, epoch, or faith--are very much like them. In fact, it pays to approach people as an anthropologist would, assuming nothing.

2. People are motivated by rather short-term factors operating in their local environments, which is why folks are far more worked up about, say, the economy than about such things as climate change or Afghanistan, which at this point represent relatively nebulous and distant threats.

3. Once people reach a certain level of comfort in their lives (and perhaps it isn't a very high level) they are significantly complacent about working harder. That may be why unemployed Americans stand by as undocumented immigrants take low-paying jobs and why the average American is not alarmed about China or India gaining some kind of competitive advantage.

4. As Dostoevsky's Grand Inquisitor claimed, people on average crave comfort and security more than freedom. Or rather, just as the alcoholic, deep down, wants not to stop drinking but to be able to drink without adverse consequences, most people want freedom without responsibility or the possibility of failure.

5. All of this is to say that humans are first and foremost mammals. We are energetic and ambitious except where complacency and the conservation of energy (often wrongly and pejoratively miscast as laziness) prevail, which is frequently.

Tuesday, October 12, 2010

Real Happiness

"Hope is itself a species of happiness, and perhaps, the chief happiness which this world affords."

Samuel Johnson

Happiness has been a hot and trendy topic in psychology for a while now. Philosopher David Sosa explores the concept by contrasting it with the experience of mere pleasure; using Robert Nozick's famous "experience machine" thought-experiment, he argues that happiness must consist in "human flourishing," which, while somewhat question-begging, does imply that human beings finally crave reality as a field of action, and not merely the kind of virtual subjectivity provided by, say, drugs (or of course the Internet, which is now a more plausible instance of virtuality than Nozick's 1974 chemical one). We stand in deep need of a real external world; the solipsist may think he is happy but he is not.

Sosa's argument suggests that happiness may be more objective than subjective, that is, an individual may not be the best judge of his own happiness. And the degree to which we can control our own happiness is forever in question. According to ancient Stoics and Zen Buddhists, we have the capacity to manage our own consciousness to the point of invulnerability to external accident. But skeptics of many stripes have disagreed, claiming that grave losses or hurts may seriously impair happiness.

Perhaps, to paraphrase Shakespeare's Malvolio, some are born happy, some achieve happiness, and some have happiness thrust upon them; happiness is some kind of interaction of temperament and luck. As today's illustration suggests, I happened to be in Washington, DC over the weekend. Surely George Washington led one of the happiest of lives, and not because he was consciously exultant: a middling Virginia planter and mediocre military man, his character coupled with happenstance propelled him to a truly charmed position in an insurgency apparently destined for greatness.

Happiness is not only not congruent with pleasure--it may be compatible with considerable suffering. Did Abraham Lincoln have a happy life? While he was a jovial sort at times, and an ambitious man who surely knew his own magnitude, he also suffered grim depressions, presided over national mayhem, and died grievously. And yet the scale of his moral achievement, as well as his lasting status as the most beloved of presidents, confers an indisputable happiness upon his life, just as the verdict of history renders Mao Tse-Tung's an unhappy life even if the tyrant died serenely at peace.

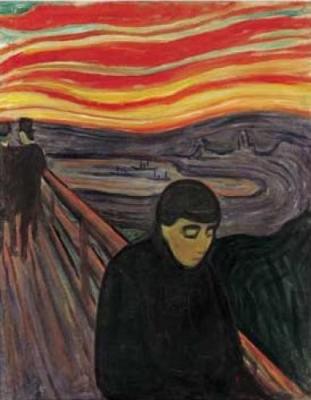

Is depression consistent with happiness? I think it depends on severity in a way that is comparable to the experience of pain. Many with chronic, low-grade distress, whether emotional or somatic, may achieve a certain detachment from their affliction that affords scope for happiness. Similarly, episodes of severe melancholy or pain, when transient, may be "happily" endured. But depression is unique among maladies in that a sense of hopelessness is itself a cardinal symptom, making it one of the chief obstacles to happiness.

Sunday, October 10, 2010

Yellow Bile

Guildenstern: The king, sir --

Hamlet: Ay, sir, what of him?

Guildenstern: Is in his retirement marvelous distempered.

Hamlet: With drink, sir?

Guildenstern: No, sir, rather with choler.

After an email I sent about a mutual patient complaining of irritability, her wise therapist commented to me on how many more patients she had seen with anger issues in recent years. She wrote, "My belief is that we are witnessing a 'cultural disorder,' with skewed attachments, a sense of entitlement, a lack of accountability, and a crisis of conscience." I too have been surprised by how many patients present with not only dysphoria, but with barely contained annoyance over the conditions of their lives.

Considering how many patients present with symptomatic behaviors of rage episodes and "going off on people," anger per se is surprisingly uncommon as a cardinal diagnostic symptom in psychiatry. As always, it all depends on context. General irritability may characterize depression, mania, or ADHD. Men in particular seem to react with defensive rage when threatened by anxiety. Borderline, narcissistic, and antisocial personality disorders often involve an inability to modulate indignation and temper.

A Times article discusses the occurrence of bullying at ever earlier ages (think kindergarten), attributed speculatively to controlling, snarky parents as well as a general media culture valorizing materialism and mean-spiritedness. After several decades of sociologists decrying the disconnectedness, narcissism and entitlement of up-and-coming generations, are we seeing the fruits in an increasingly thin-skinned populace, in both clinical and political terms? Is resentment mutually amplified by the man on the street, virtual and media alter egos, and the much vilified political establishment? Indignation and claims of victimization are everywhere and are thereby cheapened.

Tuesday, October 5, 2010

Is It Depression?

"When I use a word," Humpty Dumpty said in a rather scornful tone, "It means just what I choose it to mean--neither more nor less."

Lewis Carroll

I took the title of this post from a drug ad I saw today, a question that, contrary to its originator's intent, yields no clear answers. What kinds of answers does someone seeing a psychiatrist seek, and will she get them? (For complex and controversial reasons, it is epidemiologically more likely to be a she, although that leaves plenty of he's too.)

Someone seeing a doctor for chest pain wants to know two main things: one, is this a potentially mortal threat, and two, what can be done for it? The cardiologist can resort to a number of physical exam findings and (more likely these days) tests to answer these questions. What is at issue is: what underlying biological process does the pain reflect?

The psychiatrist's function is not much like this. If presented with someone with depressive symptoms, it is true that there are occult medical syndromes (such as, say, hypothyroidism, vitamin B-12 deficiency, or pancreatic cancer) that could be responsible, but these etiologies are vastly outnumbered by idiopathic depressions. The patient may want to know: is this caused by a "chemical imbalance," or by relationship problems, or by a history of abuse? One may speculate or construct a narrative around this, but it is impossible to know for sure.

So if a psychiatrist is usually unable to identify underlying pathophysiology, what can he/she provide? Context. A large part of psychiatry is the proper use of the sick role--people present with ambiguous symptoms that are often the target of stigma in the community at large, and the question is: am I merely weak, or am I losing my mind, or is something else going on? While the psychiatrist has limited appeal to diagnostic tests, he can call upon wide experience with persons exhibiting similar symptoms (for this reason, it is extraordinarily scary to be a neophyte in psychiatry, because one has neither firm science nor experience as backing, only clinical supervision).

The granting of the sick role and the understanding and compassion involved can be quite powerful. The psychiatrist "mans" the gateway of mental disorder, conveying seemingly contradictory messages: you are merely human and therefore vulnerable like the rest of us, and so not beyond the pale, yet to a greater or a lesser degree you are more impaired than the average person. Beyond this, there is really only management of symptoms, as I have written before, in the way that a pain specialist manages symptoms. This may take the form of dynamic understanding, or cognitive reframing, or medications, but none of these is directly treating a clear-cut disease process.

In other words, when someone presents saying "my chest hurts," the appropriate next questions are, "What is really wrong with me and how can it be fixed?" When someone presents with "I am depressed," she has usually diagnosed herself. There is a sense in which one cannot be mistaken about one's own depression any more than one may be mistaken about being in pain (subjectivity prevails here). The questions that follow are: "How does my experience compare with others you have encountered; is there hope for me; and how can this be managed?"

Identity Crisis

Christine O'Donnell has a new ad in which she not only distances herself from witchcraft, but also boldly (and baldly) asserts, "I am you." Really? So politicians are now inserting themselves not only into my living room but into my very psyche? Obviously she meant that she is like me or shares my values (which she doesn't), but the difference between simile and metaphor is significant here. I'm spending the morning repairing my boundaries.

Monday, October 4, 2010

Mad Scientists at Work

"I have neither the scholar's melancholy, which is emulation; nor the musician's, which is fantastical; nor the courtier's, which is proud; nor the soldier's, which is ambitious; nor the lawyer's, which is politic; nor the lady's, which is nice; nor the lover's, which is all these: but it is a melancholy of mine own, compounded of many simples, extracted from many objects, and indeed the sundry contemplation of my travels, in which my often rumination wraps me in a most humorous sadness."

Jaques, As You Like It

Most psychiatrists can't go a week without hearing the "guinea pig" comment from a patient alarmed by the all-too-apparent imprecision of the enterprise. The problem is that it would be bad enough if only treatment were up in the air; the reality is that diagnosis itself is often in flux. Two links--Mitchell Newmark, M.D. at Shrink Rap and Joe Westermeyer, M.D. in the green journal--illustrate nicely the yawning gulf between theory and practice when it comes to the art of the shrink.

Patients (and insurance companies) often crave DSM-type diagnosis for the sake of clarity, but such categories often do not usefully guide treatment. Both psychotherapeutic and biological interventions, strangely, can be both more general and more idiosyncratic than by-the-book diagnoses would suggest. After all, many of the most basic psychotherapeutic stances--Rogerian acceptance and cognitive reframing just to name two--apply across numerous diagnoses. The same may be true of medications--"antidepressants" are used to treat not only depression, but multiple anxiety disorders as well as eating disorders.

In this sense, two seemingly contradictory propositions may be said to be true: every case of depression is alike, and no two cases of depression are alike. The former may as well be the case when it comes to biological treatment, or rather, it is merely the case that depression exists on a spectrum of severity which dictates the aggressiveness (but not the basic type) of intervention. But it is just as true that when it comes to the fine-tuned approach to the patient (including, but not limited to, formal psychotherapy), myriad developmental and personal variables guide treatment far more than DSM diagnosis. Another way of putting this is that despite decades of attempts to make the DSM more specific, individuals within a category (whether schizophrenia or borderline personality disorder) are still more different than they are similar.

I think of evaluation and treatment as situated among three axes: severity, symptoms, and idiosyncratic history. The most basic question is: how impaired is the individual, and what extremity of intervention is called for? The first issue, whether evaluation or treatment is required at all, has already been answered, by the patient or someone close to him/her, by the time the clinician is on the scene. The second issue is whether biological intervention is likely to be helpful. In select cases, the third issue is whether inpatient or residential treatment is indicated.

Individuals are driven to treatment by symptoms, and once it is decided, if it is decided, that biological intervention is appropriate, it is shaped by symptoms more than by diagnoses. Yes, there are a few major categories helpfully kept in mind--primary psychotic disorder, depression/anxiety, bipolarity, substance abuse, and ADHD (or other cognitive impairments)--but those suffice for general formulation so far as biological treatment is concerned. When it comes to general and psychotherapeutic approaches, the unique idiosyncrasy of the patient is the chief guide of treatment.

As Dr. Newmark points out in his post, psychiatry remains profoundly different from the rest of medicine, where diagnosis is everything, in this respect. If a patient presents with chest pain, it is supremely important to know whether it is due to a heart attack, aortic dissection, bronchitis, pulmonary embolus, gastroesophageal reflux, or costochondritis, because each of these calls for clearly distinct treatments. Psychiatry is not like that. Deciding whether a person's diagnosis is depression, bipolar disorder, or schizophrenia will suggest moderate differences in treatment, but the latter will derive more from specific symptoms and personal background. This goes to show that psychiatry remains far more art (or "art") than science. The research-powers that be have yet to persuade the practitioner otherwise.

Monday, September 27, 2010

Doctor Death

"Will you tell us when to live, will you tell us when to die?"

Cat Stevens

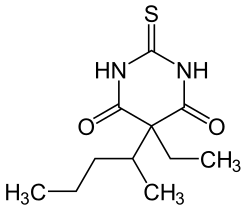

The execution of Albert G. Brown, Jr. in California has been delayed not only because of legal concerns, but also due to dwindling supplies of sodium thiopental. In my eight years of doing ECT we often had to switch back and forth between methohexital and thiopental (both barbiturates) because for whatever reason national supplies recurrently ran short. Why, I wonder?

I remember that the morning of Timothy McVeigh's execution some years ago was an ECT day, and incredibly, the anesthesiologist involved (not one I usually worked with fortunately) commented before the patient was asleep that pre-ECT drugs and lethal injection drugs are similar (big differences: ECT involves supplemental oxygen and therapeutic effect; execution, not so much). When it comes to surgical types, the stereotypes are generally true.

The article mentions, to morbid and comic effect, the assurances of the spokesperson of California's Department of Corrections that adequate thiopental was available "to stop Mr. Brown's heart." Absurdly, the drug's maker then objected that its product was not "indicated for capital punishment."

Capital punishment is ultimate faith in the power of the state, which makes the conservative case for it puzzling. Execution is misguided for the same reason that suicide is misguided--in both cases death's finality overlooks the perennial possibility of human errors of judgment, whether of culpability or of the value of one's own life.

In any case, making execution into a quasi-medical procedure is a mockery. If we will celebrate death and its deterrent effect, let us haul out the gallows and the guillotine.

Becoming Who You Are

Sean Wilentz's Bob Dylan in America (excerpt here from The Daily Beast) is a worthy review of that force of nature, offering not a comprehensive examination (which will some day run to many volumes), but a series of deep core samples, as it were, taken from representative phases of Dylan's tortuous and protean career.

Dylan's story is a half-century version of Seinfeld's "show about nothing," that is, he is the trickster irrationalist, the artiste supreme, resisting every categorization. He is about nothing but sheer exuberant creativity, following his own quiddity, throwing off songs as a fire throws off sparks. For him there is only history, human nature, and the music driving the flower through the green fuse. He speaks endlessly but does not answer questions (as such, he is a kind of ultimate counter-example to psychiatry, or perhaps rather a competitor, the great blank screen and arch-therapist).

In parallel, D. G. Myers at A Commonplace Blog neatly identifies the dilemmas of religious toleration vs. tolerance. A deeply religious man to judge from his writing, he points out that all theological justification is logically circular, and there is no arguing first principles; in a process that apparently remains mysterious, one finds oneself either inside or outside of a belief system. So religious toleration is the recognition that one has nothing to fear from other faiths outside of coercion and violence.

What do Dylan and religion have in common? For me, it is the realization that there is finally nothing outside of nature, attachment, and seduction. I mean the latter not in any manipulative sense, but in the sense that not only art, but also persuasion and reason, are attempts to beckon others hither, to say, "Look at this wondrous state of being, if only it could be realized."

Why This Psychiatrist Isn't Practicing Psychotherapy

In expectation of their forthcoming book, the Shrink Rap folks did a post soliciting inquiries about psychiatry. Predictably, among them was: why aren't more psychiatrists doing psychotherapy? There are a number of ways to answer this, the simplest and least sophisticated being: shrinks are increasingly co-opted by Big Pharma and choose big bucks over introspection and integrity. That happens, of course, but it isn't the whole story.

Another way of looking at it is just the division of labor. People tend to get better at what they spend a lot of time doing. In recent decades huge numbers of psychologists and social workers entered the therapy arena, and not only do they often do therapy as well as a psychiatrist could--often they do it better. Why don't internists offer physical therapy, or detailed nutritional counseling? Because there are specialists who offer those services. Yes, they do offer them somewhat cheaper than an internist could or would offer them, but the more important point is that those specialists get really good at what they do.

To assume that psychiatry without formal psychotherapy (of the explicitly defined, 50-minute variety) is nothing more than pill-pushing is a warped and shrunken view of the medical role. The medical dimension of psychiatric practice has its own healing frame and ritual, the management of which calls for nuanced understanding of human nature and diagnosis; that is, psychiatry should offer a unique professionalism. Psychiatry without psychotherapy should not be confined to the peddling of antidepressants any more than internal medicine without physical therapy or nutritional counseling should be defined by the peddling of muscle relaxants or oral hypoglycemics.

As Freud himself believed, an M.D. after one's name does not endow one with unique therapy skills. As a psychologist reminded me years ago, people tend to do what they are trained to do. And as a commenter responded to a previous post on this topic (I can't seem to find it, so I paraphrase), people tend to practice what they believe. That is somewhat limiting (there are a lot of things I believe in more than in psychiatry, but it is necessary to pay the bills), but more or less true. I practiced ECT for years, but I don't "believe in" ECT more than in therapy. There is also, crucially, the matter of personal fit.

There are a lot of good therapists out there, and there are a lot of primary care physicians able to offer an SSRI (for better or worse) for transient or mild conditions. But people seem to have a hard time finding intelligent psychiatrists to offer, if nothing else, prognosis and understanding if typical treatments don't seem to work. One can have and apply a knowledge of the history, sociology, and philosophy of mental disorder without feeling the need to provide formal psychotherapy. I have done the latter in the past, and perhaps I will do it again, but for the time being it is more interesting in the abstract than in actuality.

Wednesday, September 22, 2010

The Solitary

"In a soulmate we find not company but completed solitude."

Robert Brault

Briefly, The Atlantic features a story on Donald Triplett, the now 77-year-old who was the original child diagnosed with autism by Leo Kanner. After him, I suppose, le deluge.

One can only marvel at the complexities of this diagnosis, which rival those of schizophrenia. In both cases, is this a diagnosis unique to modern times? In contrast to mood disorders, which have demonstrably always been with us, it is difficult to find clear traces of schizophrenics and autistics in the historical record. Did they merely elude the spotlight of history, eking out obscure lives in remote farms or urban hovels? Or is some relatively recent pathogen, toxin, or environmental poison at work?

Does autism represent merely aberrant wiring, the vulnerability of a vastly complex process to contingent errors? On the face of it, autism (like schizophrenia) would seem likely to have caused a major fitness disadvantage to our ancestors (Donald in the article lacks not only offspring, but any history of girlfriends). Or does autism merely represent the severe form of gene associations that in milder forms may have generated unusual social respect in evolutionary times past? And as with ADHD, autism has no place to hide in hyper-social, hyper-competitive societies. Anxious suburban settings strive to emulate the more relaxed small-town acceptance and support enjoyed by Donald Triplett. One could also ask, inasmuch as he does not appear to be unhappy, whether he is "disordered" at all.

Monday, September 20, 2010

America's Most Wanted (Doctors)

The dogs may bark, but the caravan moves on. Just when I had carried my graduate school application to the mailbox (do they still accept paper?)--I heard the market for Wallace Stevens studies is strong--I see that, according to an update in Psychiatric Times, I may not want to give up my day job quite yet.

It is striking that as many critics deride the profession and its tools, its real-world prospects grow apace. Indeed, the treatment of children, now most controversial, is precisely where the best jobs are. Why, I wonder? Pharmaceutical behemoths stoking demand? Post-imperial, recessionary American malaise? Perhaps it is also related to the increasing pressure on primary care doctors, who just can't handle the huddled masses of the unhappy.

Sunday, September 19, 2010

Murray Bail's The Pages

In Murray Bail's The Pages (NYT review here), two women, one a philosopher and the other a psychoanalyst, drive into the Australian hinterland so that the former may appraise the unknown work of a reclusive self-styled philosopher who has died, leaving his work in disarray in the austere corrugated steel shed where he labored for years.

So far the story itself sounds austere, but Bail's short novel is told briefly and impressionistically. Within the framework of the mystery of Wesley Antill, of his life and his life's work, philosophy and psychology as competing ways of being and knowing are set in relief.

That Bail, previously unknown to me but clearly an assured and sophisticated writer, trots out certain well-worn stereotypes makes me wonder if he didn't do so knowingly, as if defying the stigma of stereotyping or implying that there is more truth in such than we would care to admit. For we meet the flaky psychoanalyst, who has affairs with married men (and at least once in the past, with a client) and who manages to come across as both curious and self-absorbed. Her ambivalent friend is the detached, vaguely awkward, Aspergers-ish philosopher. Both of these are juxtaposed with the tough, taciturn ways of the sheep farmers (Antill's brother and sister) whom they meet on their errand.

Of course, philosophy and psychology do not exist in simple contrast or parallel. Philosophy is seen to have crucial emotional and biographical functions, whereas psychology makes truth claims, all too often unexamined. But Bail is obviously not interested here in academic arguments, but in philosophy and psychology as differing ways of being in the world, which Bail strikingly links to the physical environment:

Hot barren countries--alive with natural hazards--discourage the formation of long sentences, and encourage instead the laconic manner. The heat and the distances between objects seem to drain the will to add words to what is already there. What exactly can be added? "Seeds falling on barren ground"--where do you think that well-polished saying came from?

It is the green smaller countries in the northern parts of the world, cold, dark complex places, local places, with settled populations, where thoughts and sentences (where the printing press was invented!) have the hidden urge to continue, to make an addition, a correction, to take an active part in the layering. And not only producing a fertile ground for philosophical thought; it was of course an hysterical landlocked country, of just that description, where psychoanalysis itself was born and spread.

It would appear that a cold climate assists in the process. The cold sharp air and the path alongside the rushing river.

In Bail's telling, here and elsewhere, philosophy (even if it is thoroughly naturalistic) has an otherworldly aspect; that is, it can only deal with deeply human problems, yet it seeks to distance itself from its human roots, becoming suspicious of language itself and attempting to break into some situation of truth above or beyond. It thrives in barren (mental and physical) landscapes, where everything extraneous is put aside. It is unclear whether the enterprise is heroic or pathological. Elsewhere he writes:

"Too much light is fatal for philosophical thought." But some light is necessary.

That is, philosophy is about clarity, but total illumination lays bare the questionable motives of philosophy itself. Philosophy can only seek its own justification as a cat chases its tail. Yet one comes away from the book with the impression that psychoanalysis, while stemming from an honorable impulse to know oneself, is forever losing its way in acts of self-indulgent navel-gazing in cluttered, verbose interactions. Like I said, stereotypes--there it is, that idea again.

This is an intriguing fly-by view of philosophy--see its towering peaks and desert expanses--and psychology--see the buildings crowded into the hillsides, with people busily moving to and fro. Overarching it all is a book like this, the work of the imagination.

Friday, September 17, 2010

Is Science Interesting?

(The above purports to be a Chinese periodic table; if the characters instead express sentiments hilarious or profane, the more fool me).

Over at 13.7 Ursula Goodenough eloquently makes the case for scientific understanding as a civic and spiritual duty--it is not enough that we admire or respect nature, as individuals we must know how she ticks, at least in basic terms. She recognizes that such knowledge not only doesn't always come naturally or easily--often it is actively resisted. And yet she is not primarily interested in science as a means to technology.

It seems to me that curiosity is to science what speech is to the written word. Curiosity is spontaneous and natural, whereas science is part of culture and must be taught to successive generations. And while humans are inherently inquisitive about the physical world, we tend to be most curious about those environmental aspects that affect us most directly, and even so, our interest in social matters of love, violence, power, and gossip is often stronger still.

Is science necessary, any more than the invention of writing was necessary, or are both these merely contingent? Verbally and scientifically literate cultures are not superior to oral and pre-scientific ones, or if we consider them so, it is only because that is the water that we swim in. The most straightforward importance of science is its enabling us to manipulate the physical environment; when we wring our hands about the state of math and science education in this country, this is no high-minded spiritual concern, but rather worry that other nations may develop competitive technologies ahead of us.

I think that beyond the salience of science as means to other ends, it offers two other enticements, one spiritual and the other, for lack of a better term, I would call the human pleasure of puzzle-solving. These sometimes contrasting satisfactions shed light on different cognitive styles.

Science performs certain spiritual functions in relation to the physical world, functions pertaining to origins, essences, regularity, and plenitude. Science teaches us, in a manner dependent on logic and replicated experiment and not dogma, that there is far more to reality than what our immediate senses may perceive, from the microscopic to the cosmic and to the extent and variety of the living world. Science reassures insofar as it shows that reality obeys laws rather than whimsy, endowing the universe with a sublimity beyond that achieved by art or religion. However, beyond a certain point, details do not matter so much; I can appreciate the grand implications of evolution without a thorough acquaintance with the development of snails over two billion years.

The spiritual offerings of science go only so far. Over the years I have often had an intense interest in science, but I never felt a temptation to become a scientist. I majored in chemical engineering and then chemistry before realizing that I was not enough of a puzzle-solver to do science. I found scientific research to be soul-crushingly dull.

Many people (fortunately) take delight in how things work, either mechanically or verbally. They get a kick out of Rubik's cubes, crossword puzzles, or detective stories. My mind never really worked that way--I am naturally drawn to semantic, verbal, emotional and narrative insights. Neither mindset is superior, and I'm sure it's no evolutionary accident that human nature encompasses these two ends of a spectrum.

To be sure, I "got" science well enough to get into and through medical school without a problem, and it is wondrous that a biological system generates the insights I'm interested in, but the existential condition of suffering is what engaged me in the first place; the mere details of neuroscience are not, finally, compelling. That is, neuroscience does provide self-knowledge, but it is not the primary or even the best source, and it says nothing about how one should live.

And yet one might object: isn't psychiatry or psychotherapy like puzzle-solving? Not really. Yes, it is about pattern recognition, but it is not about arriving at a final aha! moment at which all the ambiguities drop away and nature stands revealed. Psychological treatment is an amalgam of personal history, emotional hermeneutics, and biotechnology in the service of existential goals and values.

Goodenough makes excellent points in her piece, but I think she protests too much. As a scientist and a puzzle-solver, she is baffled that many are not similarly wired. She ascribes it to some degree to fears of reductionism, but I think this applies only to a few scientific issues, such as evolution or neuroscience inasmuch as many see them as opposed to God or the soul.

Otherwise science is a specialized and contingent preoccupation. I am glad that I understand that plants grow not because spirits in the ground are telling them to, but because they convert solar energy into carbohydrates via photosynthesis in their leaves. However, the precise chemical equations involved, unless or until they are practically imperative for me to know, I am content to leave to the scientists.

Wednesday, September 15, 2010

The Dragon's Hoard

"Man is hungry for beauty. There is a void."

Oscar Wilde (via review by Arthur Krystal)

I always thought of dragons, the greatest and darkest creatures of Faerie, chiefly as collectors and connoisseurs, not primarily as plunderers or marauders. Their treasures would be rich, strange, and obscure, gained as much by study and ingenuity as by brute force or fire. The trove under the mountain promised a hidden plenitude.

As I think more about Paul Bloom's How Pleasure Works, and his theory of the "life force" embodied in art works and artifacts, it occurs to me that this life force really consists of attachment, to a transcendent Other that is an artist or other cathected individual. The memento is a repository of attachment. If money is about power and freedom, collected items are about connection and the gravity of history.

In Plato's "Symposium" Socrates presents a theory of erotic love as a deficit state, as the craving of an inherently incomplete entity. Aristotle wrote that the solitary man is either a beast or a god (and I don't think he saw men as gods). No matter how we attempt to defend against it, need is the default state of humanity. This need is best satisfied by relationships, but even those with abundant relationships maintain a system of stored attachments in the form of memorabilia, whether in the form of photographs, letters, books, or other valued items.

Having a romantic connotation of the dragon's hoard from childhood, I found it jarring for a while to hear of hoarding as a hallmark of pathology. How indeed does one shape and prune one's network of keepsakes? This is a deeply personal art. Just as, per Samuel Johnson, one should keep one's friendships in constant repair, so should one maintain one's personal record of attachments.

This art is distinguished by discrimination. Just as we are dismayed by those who would cast away every book, photograph, and card, we are appalled by those who cast away nothing. Just as he who loves everyone arguably loves no one (in particular), so he who keeps everything has become blind to relative value. In the case of hoarding the "life force" has become clotted and stagnant; a natural need has defeated its own purpose. If Thoreau was right that one is wealthy in proportion to what one can do without, it is also the case that human beings are those animals in need of apparent superfluity.

Entitlements High and Low

James Ledbetter at Slate describes the explosion of Social Security Disability Insurance coverage in recent decades, to the current rate of 4% of the potentially working population, as high as 6% in some states. The two most common kinds of conditions covered? Mental disorders and musculoskeletal injuries (think lower back pain), both of which are notoriously elastic. Both are often quite real and severe, but surely these soaring disability rates are driven by non-clinical factors as well.

I work at one clinic at which virtually all the patients (or "consumers" as they are designated in the community mental health setting) either have disability status or are assiduously seeking it. They openly compare notes on how best to get a "crazy check" and to retain it long-term (patients often resist reduction or removal of medications in the belief that it may make them appear less disabled). Before they learn the system they are surprised to learn that I am not directly involved in the process--Social Security has its own protocol for determining disability. The bar is set apparently high--people are often turned down multiple times over a period of years, but repeated appeals especially with legal support usually seem to succeed, and once attained, disability is rarely lost, at least in the population I see.

Obviously many warrant disability support whatever the circumstances. But even among the questionable ones it is no simple matter of conscious malingering. Between $500 and $1000 per month isn't much, but it compares well with full-time minimum-wage work, which is the best that many disability candidates could hope for. And as Ledbetter mentions in his piece, disability rates may reflect long-term weakness in the job market that could be worse than feared. If someone with any mental symptoms whatsoever is chronically unable to find a job, his/her natural inference may come to be that s/he is disabled by those symptoms. Certainly I have seen this deduction at work (so to speak) in many young, quite able-bodied men in rural areas who have lost once abundant construction jobs. I was pleased to see that Ledbetter also produced the argument (as I did in a post last week) that increasingly technical jobs may be out of reach of a greater fraction of the labor pool.

Meanwhile, at the other end of the socioeconomic spectrum, NPR's health blog, citing a JAMA study, looks at reasons why doctors accept pharmaceutical company perks despite well-known concerns about conflicts of interest. The most common reason appears to be that they believe that they deserve them. This sounds familiar from my years of trying to educate residents and medical students about the ethical issues involved. The latter just could not compete in general with their conviction that as they were working hard and--at that point in their careers anyway--not mind-bogglingly well-compensated, they were in fact entitled to a free lunch. One man's entitlement is another man's just deserts (or just desserts as the case may be).

The suppression of freeloading is the ultimate evolutionary game of whack-a-mole.

Monday, September 13, 2010

Little Thoughts

I am steering clear of Big Thoughts today; sometimes one just has to write something, anything.

1. Whenever I despair of psychiatry's approximations, I am consoled by economics. Am I the only person who wearies of the endless liberal/conservative tug-of-war on taxes? Really, wouldn't one think that in 2010 it should be possible to empirically determine the optimal tax rate in terms of effect on economic stability and growth? No? And people are surprised we haven't figured out depression?

2. It is official, according to Thomas Friedman and David Brooks: the United States is in a national funk, 300 million slackers with respect to the values that made this country great. Is this surprising? Having achieved the greatest prosperity in the history of the world, and lacking a coherent antagonist (Islamic terrorism is more akin to organized crime than to an Evil Empire), complacency sets in. It is human nature. Is it necessary that not only the 20th, but also the 21st, be American centuries? When can we mutate into a more temperate version of Canada?

3. Paul Bloom's How Pleasure Works (Bob's review here) is an entertaining if unchallenging stroll through his pet theory of "essentialism," the human tendency to believe in and attach to unique and individual identities as opposed to interchangeable sets of properties. I particularly enjoy his discussions of objects such as artworks or even random possessions of celebrities that retain the transferable "life force" of their originators. The collector (and bibliophile) in me loves this: one accumulates loved objects as a reservoir of life force, a tide that in the case of hoarders gets out of hand.

4. Check out Douglas Coupland's amusing "Dictionary of the Near Future"--I particularly like his "pseudoalienation" (technology as an intensification of the human, alas) and two varieties of melancholy.

Sunday, September 12, 2010

Conundrums and Credulity

A couple of recent links usefully examine the empirical and moral implications of religious belief. Tim Crane considers the ambiguous relation of faith and evidence, pointing out that while a demanding need for evidence for God is seen to reflect a fragile faith, it is nonetheless the case that religion collapses without a crucial intersection between the supernatural and the historical record.

John Cottingham, reviewing books by Mark Johnston and Andre Comte-Sponville (unread by me), suggests the paradoxical moral effects of specific belief. His review reminds us that belief in a personal God, with its implied promises of salvation and heavenly reward, cannot escape entirely the taint of idolatry and self-interest. The highest, most heroic spirituality would be that which conducts itself as if God existed while relinquishing any actual belief that this is the case. That is, the truest Christian, even if it could somehow (impossibly) be conclusively demonstrated that Jesus Christ was merely a man, would go on just as before.

However, Cottingham argues, in a way that is probably familiar to readers of this blog, that without the existential anchor of actual theistic belief, religion loses all specific content and disintegrates into well-meaning but empty notions of the life force. And worse, he claims that the project of a fully secular ethics is ultimately doomed, that without the foundation of communal faith underlying Western civilization, the elaborate complexities of Locke, Kant, etc. have no binding force.

This introduces a paradox of morality: so long as it is buttressed by a personal God (who by implication may dole out rewards and punishments), it is primitive and tribal in nature, but if God is jettisoned, morality becomes metaphysically optional. Cottingham clearly prefers the former as the lesser of two evils. Of those who, like myself, are non-believers yet do not routinely indulge our worst impulses, he would presumably say that we are either merely timid or are ungratefully coasting on a sense of decorum stemming ultimately from the Western faith we profess to be unmoved by.

I guess I see secular morality as a historically novel trend, as unprecedented in our ancient evolutionary history as, say, scrupulous regard for the welfare of animals or acceptance of antipodal human beings as something other than targets for conquest. I think that the emotional roots of religion are biologically deep, but that they can find other soil than historical theism. After all, the historical specificity of faith accentuates the paradox of religious tolerance: if a Christian truly believed that a Muslim's tradition were as valid as his own, he would have no compelling reason to remain a Christian. Instead, he consents to respect the Muslim point of view, but for reasons of social concord that lie outside of faith itself; for monotheistic gods are inherently jealous.

But to return to Crane's point about history and faith, it is important to recall that belief cannot avoid being mediated through and through, as the crucial roles of Scripture and the Koran reveal. When I say that I do not believe in Allah or the Christian God, I am really saying that I do not believe that the assembled writings of various Middle Easterners of 1300 or 1800 years ago reveal anything more than compendia of hearsay. It is not God that I disbelieve in; it is a specific historical product of deeply fallible humanity that I disbelieve in. Similarly, those who profess to believe actually subscribe to a particular and all-too-human historical tradition, in the absence of which religion sublimates into a vapor of vague feeling.

It could also be said that I (presumptuously perhaps) await the one and true religion, which will come after all of these false starts. I propose a return to the Egyptians, those worshippers of cats and of the sun.

Thursday, September 9, 2010

The Analogy with Education

Briefly and speculatively this morning: at a friend's behest I've been reading Robert Whitaker's Anatomy of an Epidemic, another entry in the thriving anti-psychiatry industry. I am still early in the book, but so far it is, for its genre, a sober attempt to answer the question of why, after decades of intensive research, the problem of mental illness and its attendant disability appears to be, if anything, worse than ever. A hypothetical answer came to me when I read Robert J. Samuelson's suggestion about educational reform in Newsweek.

Samuelson observes that the United States has been in educational "crisis" for decades now, yet despite the application of massive resources and large numbers of teachers (and immeasurable pedagogical ingenuity), average academic performance has not significantly budged. He makes the not very politically correct claim that the problem is no longer the educational system, it is the students, or at least the kinds of students that the culture at large now produces. He adduces two factors: the increasingly anti-intellectual and autonomous culture of adolescence, and the huge increase in educational access in an increasingly technological and sophisticated society. In a nutshell, the educational system increasingly aspires to wring blood from a stone.

Human beings naturally vary in cognitive skills, concentration, etc. and for most of human history only a minority of the population endured extended formal schooling. The past generation or so has been the first experiment in population-wide education, and it could be that the system is coming up against natural human variability. In the past those who, whether due to lack of opportunity or aptitude, could not obtain extensive education were able to find niches in agricultural or other basic functions that are now increasingly occupied by machines. In an economy increasingly requiring advanced and specialized skills, niches are more competitive and harder to come by. Thus the uneducated are, relatively speaking, more disabled than in times past.

It could be that mental disorders, except perhaps for the most severe forms (the exact boundaries of which are still not determined), are not so much discrete entities as they are one end of the bell curve in terms of parameters such as mood, anxiety, attention, and reality testing. They may be analogous to learning disabilities inasmuch as deficits in stress tolerance, mood stability, and sustained attention increasingly place persons at greater disadvantage. Contemporary society is not so much provoking these problems as it is making them more apparent, that is, revealing natural human variability in the same way that, say, a basketball camp highlights differences in jumping ability. Because these capacities are complex and developmental, they are not easily modified.

Just as the academically challenged found niches in centuries past, so those with anxiety and mood disorders may have been able to gravitate to settings that accommodated their symptoms. They found rural occupations permitting distance from people; they found solace in church; they were able to obtain support from family. But in our more mobile and dispersed society, the only jobs available are often intensely stressful, requiring constant contact with people even if only on a telemarketer's line. Individuals who used to rely on family increasingly have to resort to official disability status. It is not the case that psychological disability is new or unprecedented--it is just that there is nowhere for it to hide.

ADHD is obviously the place at which educational and psychiatric challenges intersect, and to my mind it is the perfect example of a hypercompetitive, ambitious post-industrial society illuminating natural and evolutionary variation in a human cognitive capacity, in this case attention and impulse control. The fact that distractibility, like the ability to metabolize (then scarce) calories parsimoniously, was adaptive for much of human evolution, does not unfortunately mean that it is adaptive now. The effort to suppress obesity, like much of psychiatry, may be an instance of cultural evolution, one that appears to have a lot of bumps in the road.

Monday, September 6, 2010

The Perfect Game

For the holiday, just a nod to David B. Hart's passionate paean to baseball, which he, tongue only obliquely in cheek, proposes as the most uniquely American contribution to culture, one that differs in kind and essence from those more cramped sports lumped together as "the oblong game:"

All of this, it seems to me, points beyond the game's physical dimensions and toward its immense spiritual horizons. When I consider baseball sub specie aeternitatis, I find it impossible not to conclude that its metaphysical structure is thoroughly idealist. After all, the game is so utterly saturated by infinity. All its configurations and movements aspire to the timeless and the boundless. The oblong game is pitilessly finite: Wholly concerned as it is with conquest and shifting lines of force, it is exactly and inviolably demarcated, both spatially and temporally; having no inner unfolding narrative of its own, it does not end, but is merely curtailed, externally, by a clock (even overtime is composed only of strictly apportioned, discrete units of time).

__________________

No other game, moreover, is so mercilessly difficult to play well or affords such a scope for inevitable failure. We all know that a hitter who succeeds in only one-third of his at-bats is considered remarkable, and one who succeeds only fractionally more often is considered a prodigy of nature. Now here, certainly, is a portrait of the hapless human spirit in all its melancholy grandeur, and of the human will in all its hopeless but incessant aspiration: fleeting glory as the rarely ripening fruit of overwhelming and chronic defeat. It is this pervasive sadness that makes baseball's moments of bliss so piercing; this encircling gloom that sheds such iridescent beauty on those impossible triumphs over devastating odds so amazing when accomplished by one of the game's gods (Mays running down that ridiculously long fly at the Polo Grounds in the 1954 World Series, Ted Williams going deep in his very last appearance at the plate); and so heartbreakingly poignant when accomplished by a journeyman whose entire playing career will be marked by only one such instant of transcendence (Ron Swoboda's diving catch off Brooks Robinson's bat in the 1969 series).

___________________

I am not nearly as certain, however, that baseball can be said to have any discernible religious meaning. Or, rather, I am not sure whether it reflects exclusively one kind of creed (it is certainly religious, through and through). Its metaphysics is equally compatible and equally incompatible with the sensibilities of any number of faiths, and of any number of schools within individual faiths; but, if it has anything resembling a theology, it is of the mystical, rather than the dogmatic, kind, and so its doctrinal content is nebulous. At its lowest, most cultic level, baseball is hospitable to such a variety of little superstitions and local pieties that it almost qualifies as a kind of primitive animism or paganism. At its highest, more speculative level, it tends toward the monist, as a consistent idealism must.

Saturday, September 4, 2010

Science Run Amok

Every art and every inquiry, and similarly every action and pursuit, is thought to aim at some good; and for this reason the good has rightly been declared to be that at which all things aim.

Aristotle (trans. W. D. Ross)

Over at Edge, Sam Harris writes on science as it may apply to morality. He raises many compelling issues, but his piece exemplifies the grievous attempt to extend the relentless rigor of science beyond its rightful purview. If nothing else, the article is worth reading for its sheer effrontery (leave aside the moral debates of three thousand years, it's really rather simple!).

Harris argues that the force of science may be brought to bear on the problem of morality in three respects, not only anthropologically (documentation of actual ethical practices), but also as regards the ends of morality (which he stipulates as the well-being of conscious creatures), and surprisingly the art of persuasion, that is, bringing the benighted into the orbit of the empirically right-thinking.

His method is to claim that since the methods of science (i.e. evidence, consistency, parsimony) depend on consensus, and morality depends on consensus, then they are parallel endeavors; that is, normative moral phenomena--the ways one should live--are objectively true or false in the fashion that physical states of affairs are true or false. In other words, one may dissent from scientific methods (appealing, presumably, to revelation or intuition) just as one may opt out of moral claims, but to do so, Harris implies, is to place oneself beyond the pale. He also draws an analogy with economics, suggesting that just as goods and services may (theoretically) be generated and distributed in an objectively optimal manner, so is there a neutral calculus of human well-being.

The problem is that one may recognize many modes of human well-being without falling into the mire of moral relativism. To be sure, we are not entirely adrift in ambiguity. It is uncontroversial, for instance, to suggest that the citizens of Switzerland are better off in absolute terms than those of North Korea, just as it is safe to say that Bach is superior to Katy Perry. However, these are extremes, and most moral distinctions are more subtle. Are Americans better or worse off on average than Germans? Is Bach superior to Schubert?